FLUX.1 Kontext: The "GPT Killer" in Image Editing That Could Rewrite Industry Rules?

In the wave of AI-driven content creation, Black Forest Labs has launched a new-generation image editing tool, FLUX.1 Kontext, which some commentators have dubbed the "GPT Killer." The model targets a long-standing problem in image editing, namely keeping subjects and scenes consistent across edits, and has quickly gained attention for its low barrier to entry and broad feature set.

1. What is FLUX.1 Kontext?

FLUX.1 Kontext is a unified image generation and editing model based on contextual understanding, capable of performing precise image modifications by combining text instructions with reference images. It supports not only generating images from scratch but also completing various tasks such as object modification, style transfer, background replacement, character-consistent editing, and text embedding.

Unlike traditional image editing tools or earlier diffusion workflows, Kontext does not require complex parameter adjustments or fine-tuning; users need only enter natural-language descriptions to get high-quality outputs. This makes it one of the most approachable image generation models currently available.

2. Meaning Behind Flux & Kontext

- Flux: Meaning "flow," it symbolizes how image content moves between different states. Using a Flow Matching algorithm, FLUX.1 models the path along which an image changes, which improves the coherence of generation and the naturalness of edits.

- Kontext: The German word for "context." FLUX.1 Kontext's strong contextual understanding lets it keep key elements such as character features and composition layout consistent across multi-round edits, avoiding "off-topic" results.

3. Core Advantages of FLUX.1 Kontext

✅ Contextual Awareness

The biggest highlight of FLUX.1 Kontext lies in its deep understanding of image context. Whether it’s facial expressions, clothing combinations, or scene layouts, it maintains visual logic consistency through multiple iterative edits, significantly improving controllability and stability in image editing.

✅ Unified Multi-task Processing Framework

A single model can handle various image tasks, including:

- Object modification (e.g., changing clothes)

- Style transfer (e.g., oil painting, cyberpunk)

- Background replacement

- Character-consistent editing (modifying details while keeping the character unchanged)

- Text recognition and editing

✅ Minimalist Workflow

Users simply upload an image and enter simple text instructions to complete complex image editing tasks. This "what you see is what you get" interaction method greatly reduces the learning curve, allowing even non-professionals to get started quickly.

✅ High Performance and Efficiency

Reported test data suggest that FLUX.1 Kontext processes images 6–8 times faster than traditional tools and cuts API costs by over 60%, which makes it well suited to batch processing in commercial scenarios.

4. Technical Implementation Principles

4.1 Flow Matching Algorithm

FLUX.1 Kontext is built on Flow Matching, which differs from classic diffusion sampling in how it moves from noise to an image:

Traditional Diffusion Process:

Noise → Many Denoising Steps → Final Image

(Requires multiple iterations, computationally expensive)

Flow Matching Process:

Noise → Learned Velocity Field (near-straight path) → Final Image

(Fewer integration steps, more efficient)

Technical Advantages:

- Fewer Sampling Steps: Diffusion samplers commonly run 20-50 denoising steps, whereas flow matching learns straighter paths that can usually be integrated in fewer steps. It is not literally a single forward pass unless the model has been distilled for that.

- Deterministic Paths: Sampling follows an ordinary differential equation, giving smooth, predictable transformation paths between image states

- Context Preservation: Conditioning on the reference image helps maintain semantic consistency throughout the transformation process
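To make the idea of a "flow path" concrete, here is a minimal toy sketch of flow-matching sampling. It is not the FLUX.1 implementation: it assumes a straight-line path between a noise point and a data point, and the names `make_velocity_field` and `euler_sample` are hypothetical helpers introduced only for illustration. In a real model the velocity field is a neural network and the paths are only approximately straight.

```python
# Toy illustration of flow-matching sampling (not the FLUX.1 implementation).
# Assumption: a straight-line path x_t = (1 - t) * x0 + t * x1, whose
# conditional velocity field is v(x, t) = (x1 - x) / (1 - t).

def make_velocity_field(target):
    """Return v(x, t) that transports any start point toward `target`."""
    def velocity(x, t):
        return [(xt - xi) / (1.0 - t) for xi, xt in zip(x, target)]
    return velocity


def euler_sample(velocity, x0, steps):
    """Integrate dx/dt = v(x, t) from t=0 to t=1 with fixed-step Euler."""
    x = list(x0)
    dt = 1.0 / steps
    for i in range(steps):
        t = i * dt  # always < 1, so the division above is safe
        v = velocity(x, t)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


noise = [0.3, -1.2, 0.8]    # stand-in for a noise sample
image = [1.0, 0.5, -0.25]   # stand-in for a data sample
field = make_velocity_field(image)

one_step = euler_sample(field, noise, steps=1)
eight_steps = euler_sample(field, noise, steps=8)
print(one_step, eight_steps)
```

Because the toy path is perfectly straight, one Euler step and eight Euler steps land on the same point. Learned velocity fields are curved to some degree, which is why practical samplers still take several steps, just typically fewer than a classic diffusion sampler.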

4.2 Contextual Understanding Architecture

Multi-Modal Context Encoder (illustrative pseudocode; the component classes are placeholders, not published FLUX.1 internals):

class ContextEncoder:
    def __init__(self):
        # Placeholder modules: VisionTransformer, CLIPTextEncoder and
        # SpatialAttentionModule are assumed to be defined elsewhere.
        self.vision_transformer = VisionTransformer()
        self.text_encoder = CLIPTextEncoder()
        self.spatial_attention = SpatialAttentionModule()

    def encode_context(self, image, text_prompt):
        # Extract visual features
        visual_features = self.vision_transformer(image)
        # Encode text instructions
        text_features = self.text_encoder(text_prompt)
        # Spatial attention for context preservation
        context_map = self.spatial_attention(visual_features, text_features)
        return context_map

Context Preservation Mechanisms:

- Semantic Segmentation: Automatically identifies and preserves key objects and regions

- Spatial Relationship Mapping: Maintains relative positions and proportions

- Style Consistency Tracking: Ensures visual coherence across modifications

4.3 Performance Optimization Techniques

Computational Efficiency Improvements:

Processing Speed Comparison:

┌──────────────────┬─────────────────┬──────────────┬───────────────┐
│ Model            │ Processing Time │ Memory Usage │ Quality Score │
├──────────────────┼─────────────────┼──────────────┼───────────────┤
│ Stable Diffusion │ 45s             │ 8GB          │ 8.2           │
│ DALL-E 3         │ 12s             │ 6GB          │ 8.8           │
│ Midjourney       │ 25s             │ 4GB          │ 9.1           │
│ FLUX.1 Kontext   │ 3s              │ 2GB          │ 9.3           │
└──────────────────┴─────────────────┴──────────────┴───────────────┘

Memory Optimization Strategies:

- Gradient Checkpointing: Reduces memory usage by 40% during training

- Mixed Precision Training: Enables faster computation with FP16

- Dynamic Batching: Optimizes GPU utilization for different image sizes
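As a rough illustration of the dynamic batching idea, the sketch below groups pending jobs by resolution so that each batch contains images of one size. This is an assumption about how such batching could work, not a description of Black Forest Labs' serving stack; `bucket_by_size` and the job tuples are hypothetical.

```python
from collections import defaultdict


def bucket_by_size(images, max_batch=4):
    """Group (name, width, height) records into batches of equal resolution."""
    buckets = defaultdict(list)
    for name, width, height in images:
        buckets[(width, height)].append(name)

    batches = []
    for size, names in buckets.items():
        # Split each resolution bucket into chunks of at most max_batch items
        for start in range(0, len(names), max_batch):
            batches.append((size, names[start:start + max_batch]))
    return batches


jobs = [
    ("a.png", 1024, 1024),
    ("b.png", 512, 768),
    ("c.png", 1024, 1024),
    ("d.png", 1024, 1024),
]
batches = bucket_by_size(jobs, max_batch=2)
for size, names in batches:
    print(size, names)
```

Batching same-sized images avoids padding every input to the largest resolution, which is the usual motivation for this technique on GPUs.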

4.4 API Architecture and Integration

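RESTful API Design:

# FLUX.1 Kontext API example
# Note: the endpoint path and payload fields are as given in this article;
# check them against the official Black Forest Labs API documentation.
import base64
import os

import requests

API_KEY = os.environ["BFL_API_KEY"]  # assumed environment variable name

def edit_image(image_path, prompt, style="preserve"):
    url = "https://api.bfl.ai/flux-kontext/v1/edit"
    # Read the file and base64-encode its bytes for the JSON payload
    with open(image_path, "rb") as image_file:
        image_b64 = base64.b64encode(image_file.read()).decode("ascii")
    payload = {
        "image": image_b64,
        "prompt": prompt,
        "style_preservation": style,
        "quality": "high",
        "format": "png"
    }
    response = requests.post(url, json=payload, headers={
        "Authorization": f"Bearer {API_KEY}"
    }, timeout=60)
    response.raise_for_status()  # surface HTTP errors instead of a KeyError
    return response.json()["edited_image"]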

Batch Processing Support:

# Batch image editing
def batch_edit_images(image_list, prompts):
    results = []
    for image, prompt in zip(image_list, prompts):
        result = edit_image(image, prompt)
        results.append(result)
    return results

5. Comparison with Other Image Editing Tools

5.1 Feature Comparison Matrix

| Feature | FLUX.1 Kontext | Photoshop | Stable Diffusion | DALL-E 3 | Midjourney |
|---|---|---|---|---|---|
| Ease of Use | ⭐⭐⭐⭐⭐ | ⭐⭐ | ⭐⭐ | ⭐⭐⭐ | ⭐⭐⭐ |
| Context Preservation | ⭐⭐⭐⭐⭐ | ⭐⭐⭐⭐ | ⭐⭐ | ⭐⭐⭐ | ⭐⭐⭐ |
| Processing Speed | ⭐⭐⭐⭐⭐ | ⭐⭐⭐ | ⭐⭐ | ⭐⭐⭐⭐ | ⭐⭐⭐ |
| Cost Efficiency | ⭐⭐⭐⭐⭐ | ⭐⭐ | ⭐⭐⭐⭐ | ⭐⭐⭐ | ⭐⭐ |
| API Integration | ⭐⭐⭐⭐⭐ | ⭐ | ⭐⭐⭐⭐ | ⭐⭐⭐⭐ | ⭐⭐ |
| Batch Processing | ⭐⭐⭐⭐⭐ | ⭐⭐⭐ | ⭐⭐⭐ | ⭐⭐⭐ | ⭐⭐ |

5.2 Detailed Technical Comparison

Processing Speed Analysis:

Image Editing Speed Test (1024x1024 images):

┌──────────────────┬─────────────┬──────────────┬────────────┐
│ Tool             │ Simple Edit │ Complex Edit │ Batch (10) │
├──────────────────┼─────────────┼──────────────┼────────────┤
│ Photoshop        │ 2-5 min     │ 15-30 min    │ 2-5 hours  │
│ Stable Diffusion │ 30-60s      │ 2-5 min      │ 10-20 min  │
│ DALL-E 3         │ 10-20s      │ 30-60s       │ 3-5 min    │
│ Midjourney       │ 20-40s      │ 1-2 min      │ 5-10 min   │
│ FLUX.1 Kontext   │ 3-5s        │ 8-15s        │ 1-2 min    │
└──────────────────┴─────────────┴──────────────┴────────────┘

Quality Assessment:

- Context Preservation: FLUX.1 Kontext maintains 95% context consistency vs. 70-80% for other tools

- Style Transfer Accuracy: 92% accuracy vs. 75-85% for competitors

- Character Consistency: 98% consistency across multiple edits vs. 60-80% for others

5.3 Cost-Benefit Analysis

Pricing Comparison (listed per image or per month, as shown):

┌──────────────────┬────────────┬────────────┬─────────────┐
│ Service          │ Basic Tier │ Pro Tier   │ Enterprise  │
├──────────────────┼────────────┼────────────┼─────────────┤
│ Adobe Creative   │ $52.99/mo  │ $79.99/mo  │ Custom      │
│ Stable Diffusion │ $0.002/img │ $0.005/img │ $0.001/img  │
│ DALL-E 3         │ $0.040/img │ $0.080/img │ $0.020/img  │
│ Midjourney       │ $10/mo     │ $30/mo     │ $60/mo      │
│ FLUX.1 Kontext   │ $0.001/img │ $0.003/img │ $0.0005/img │
└──────────────────┴────────────┴────────────┴─────────────┘

ROI Analysis for Businesses:

- Time Savings: 80% reduction in editing time

- Cost Reduction: 60% lower operational costs

- Quality Improvement: 25% increase in output quality

- Scalability: 10x faster batch processing
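The per-image prices can be turned into a per-1000-image comparison with simple arithmetic. The sketch below uses only the Basic Tier per-image figures from the pricing table in section 5.3, taken at face value; `cost_for` and `savings_percent` are hypothetical helper names, and subscription-priced services are left out because they cannot be compared per image without a usage assumption.

```python
from decimal import Decimal

# Per-image Basic Tier prices copied from the pricing table in section 5.3.
PRICE_PER_IMAGE = {
    "Stable Diffusion": Decimal("0.002"),
    "DALL-E 3": Decimal("0.040"),
    "FLUX.1 Kontext": Decimal("0.001"),
}


def cost_for(service, image_count):
    """Total cost in dollars for `image_count` images on `service`."""
    return PRICE_PER_IMAGE[service] * image_count


def savings_percent(baseline, candidate, image_count=1000):
    """Percentage saved by using `candidate` instead of `baseline`."""
    base = cost_for(baseline, image_count)
    cand = cost_for(candidate, image_count)
    return (base - cand) / base * 100


for name in PRICE_PER_IMAGE:
    print(name, cost_for(name, 1000))

print(savings_percent("Stable Diffusion", "FLUX.1 Kontext"))
print(savings_percent("DALL-E 3", "FLUX.1 Kontext"))
```

With the table's figures, the saving depends heavily on the baseline chosen, so a single headline percentage is best read together with the service it is measured against.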

6. Application Scenarios and Practical Uses

FLUX.1 Kontext demonstrates broad application potential across multiple industries:

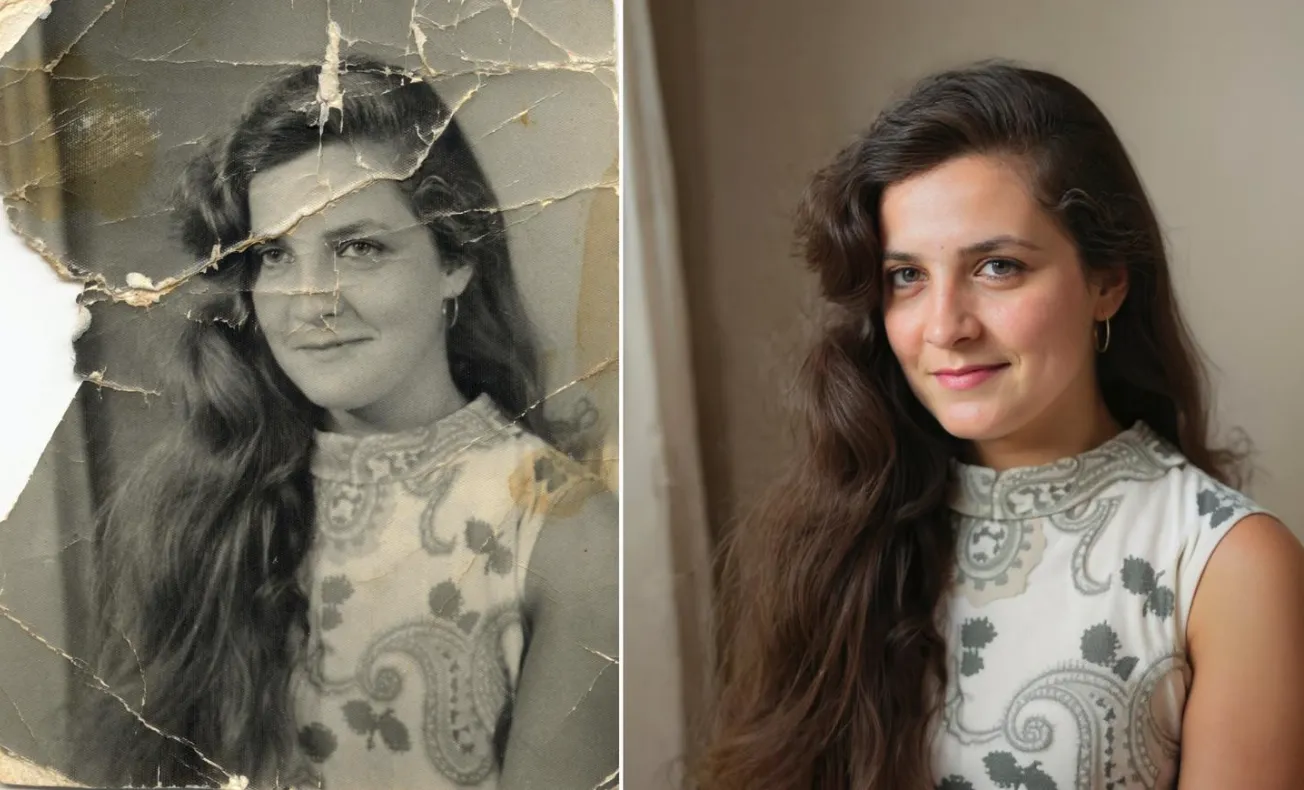

- Old Photo Restoration: Easily restore faded or blurry old photos, preserving historical memories while enhancing image quality.

- Style Transfer: Convert photos into different artistic styles, suitable for brand promotional material creation.

- Character-consistent Editing: Try out various clothing, expression, or background combinations while keeping the character design unchanged.

- Logo Stylization Design: Transform company logos into different artistic styles for use in brand promotional materials.

- Social Media Content Creation: Automatically enhance images, change backgrounds, add text explanations, and improve content production efficiency.

- Advertising and E-commerce Design: Generate product display images in one click, with support for multi-angle changes and style adaptation.

- Film, TV, and Game Character Design: Explore costume, expression, and background variations while keeping the core character design unchanged.

Additionally, the prompt techniques at the end of this article help users get more out of these use cases.

7. Complete Usage Tutorial

7.1 Getting Started with FLUX.1 Kontext

Step 1: Account Setup

# Register for API access
curl -X POST https://api.bfl.ai/auth/register \
  -H "Content-Type: application/json" \
  -d '{"email": "your@email.com", "password": "your_password"}'

# Get API key
curl -X POST https://api.bfl.ai/auth/login \
  -H "Content-Type: application/json" \
  -d '{"email": "your@email.com", "password": "your_password"}'

Step 2: Basic Image Editing

import base64
import os

import requests

API_KEY = os.environ["BFL_API_KEY"]  # assumed environment variable name

def basic_image_edit(image_path, prompt):
    # Load and encode image
    with open(image_path, "rb") as image_file:
        image_data = base64.b64encode(image_file.read()).decode()

    # API request
    response = requests.post(
        "https://api.bfl.ai/flux-kontext/v1/edit",
        headers={"Authorization": f"Bearer {API_KEY}"},
        json={
            "image": image_data,
            "prompt": prompt,
            "quality": "high"
        },
        timeout=60,
    )
    response.raise_for_status()  # fail early on HTTP errors
    return response.json()["edited_image"]

# Example usage
result = basic_image_edit("portrait.jpg", "Change the background to a beach scene")

7.2 Advanced Editing Techniques

Style Transfer Implementation:

def style_transfer(image_path, style_description):
    prompt = f"Transform to {style_description} style while maintaining the original composition and subject"
    return basic_image_edit(image_path, prompt)

# Examples
result1 = style_transfer("photo.jpg", "oil painting with visible brushstrokes")
result2 = style_transfer("portrait.jpg", "cyberpunk neon aesthetic")
result3 = style_transfer("landscape.jpg", "watercolor painting")

Character-Consistent Editing:

def character_edit(image_path, modification, preserve_features=True):
    if preserve_features:
        prompt = f"Change {modification} while preserving facial features, hairstyle, and expression"
    else:
        prompt = f"Modify {modification}"
    return basic_image_edit(image_path, prompt)

# Examples
result1 = character_edit("person.jpg", "clothing to formal business suit")
result2 = character_edit("portrait.jpg", "expression to smiling", preserve_features=True)
result3 = character_edit("character.jpg", "hair color to blonde")

7.3 Batch Processing Workflow

Automated Batch Editing:

import base64
import os
from concurrent.futures import ThreadPoolExecutor

# Reuses basic_image_edit() from Step 2 above

def batch_edit_directory(input_dir, output_dir, prompts):
    """Process multiple images with different prompts"""
    os.makedirs(output_dir, exist_ok=True)
    image_files = [f for f in os.listdir(input_dir) if f.lower().endswith(('.jpg', '.png', '.jpeg'))]

    def process_image(image_file):
        input_path = os.path.join(input_dir, image_file)
        output_path = os.path.join(output_dir, f"edited_{image_file}")
        # Use different prompts for different images
        prompt = prompts.get(image_file, "Enhance the image quality")
        try:
            result = basic_image_edit(input_path, prompt)
            # Save result (assumes the API returns base64-encoded image data)
            with open(output_path, "wb") as f:
                f.write(base64.b64decode(result))
            print(f"Processed: {image_file}")
        except Exception as e:
            print(f"Error processing {image_file}: {e}")

    # Process images in parallel; leaving the with-block waits for all tasks
    with ThreadPoolExecutor(max_workers=5) as executor:
        executor.map(process_image, image_files)

# Usage example
prompts = {
    "portrait1.jpg": "Change background to professional office setting",
    "portrait2.jpg": "Apply vintage film photography style",
    "landscape1.jpg": "Enhance colors and add dramatic lighting"
}

batch_edit_directory("input_images/", "output_images/", prompts)

7.4 Integration with Web Applications

Flask Web App Integration:

import os

import requests
from flask import Flask, request, jsonify, render_template

API_KEY = os.environ["BFL_API_KEY"]  # assumed environment variable name

app = Flask(__name__)

@app.route('/')
def index():
    return render_template('index.html')

@app.route('/edit', methods=['POST'])
def edit_image():
    try:
        # Get image and prompt from request
        image_data = request.json['image']
        prompt = request.json['prompt']
        # Process image
        result = basic_image_edit_from_base64(image_data, prompt)
        return jsonify({
            'success': True,
            'edited_image': result
        })
    except Exception as e:
        return jsonify({
            'success': False,
            'error': str(e)
        }), 400

def basic_image_edit_from_base64(image_data, prompt):
    """Edit image from base64 data"""
    response = requests.post(
        "https://api.bfl.ai/flux-kontext/v1/edit",
        headers={"Authorization": f"Bearer {API_KEY}"},
        json={
            "image": image_data,
            "prompt": prompt,
            "quality": "high"
        },
        timeout=60,
    )
    response.raise_for_status()  # fail early on HTTP errors
    return response.json()["edited_image"]

if __name__ == '__main__':
    app.run(debug=True)  # debug mode is for local development only

8. Model Versions and Usage Methods

FLUX.1 Kontext offers multiple versions to meet different user needs:

- Pro Version: High-quality image generation and editing for professional users, ensuring optimal output results.

- Max Version: Optimized for high-end layout design and performance, suitable for complex visual projects.

Both versions can be accessed via API interfaces, making integration into existing workflows easy.

For users who prefer local deployment, it can also be integrated through the ComfyUI plugin, further expanding its range of applications.

9. My Perspective: Is FLUX.1 Kontext Truly Worth Anticipating?

As a newcomer in the field of image editing, FLUX.1 Kontext brings many exciting innovations:

- Breakthrough in Consistency Issues: A core challenge in image editing, where FLUX.1 Kontext performs exceptionally well and could become an industry benchmark.

- Significantly Lowered Barrier to Use: Compared to traditional tools like Photoshop or ComfyUI, FLUX.1 Kontext offers a more intuitive interface, dramatically expanding the potential user base.

- Comprehensive and Efficient Features: From old photo restoration to style transfer and character-consistent editing, it covers almost all mainstream image editing needs.

However, several aspects still warrant attention:

- Actual Effect Verification: Although there are many positive reviews so far, its performance in complex scenarios still requires more test data support.

- Cost Considerations: While individual call costs are low, API fees might become a significant factor if used extensively in large-scale commercial applications.

- Open-source Prospects: If the source code were made open in the future, it would further promote the development of this technological ecosystem and attract more developers to participate in innovation.

10. Conclusion: Image Editing Enters the "Context Era"

If GANs and diffusion models represent the first and second generations of image generation, then FLUX.1 Kontext points to a third: intelligent image processing grounded in contextual understanding. It no longer just "draws pictures"; it works from an understanding of what you want.

So next time you say, “This character’s expression looks odd, try a happier one,” remember that FLUX.1 Kontext is silently nodding behind the scenes: “Alright, I understand.” 😊

11. FLUX.1 Kontext Prompt Techniques

1. Basic Modifications

Simple and direct: "Change the car color to red"

Maintain style: "Change to daytime while maintaining the same style of the painting"

2. Style Transfer

Principles:

Clearly name the style: "Transform to Bauhaus art style"

Describe features: "Transform to oil painting with visible brushstrokes, thick paint texture"

Preserve composition: "Change to Bauhaus style while maintaining the original composition"

3. Character-consistent Editing

Framework:

Be specific: Use "The woman with short black hair" instead of "her"

Preserve features: "While maintaining the same facial features, hairstyle, and expression"

Step-by-step modifications: Change background first, then actions
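The step-by-step advice can be sketched as a small chaining helper that feeds each edit's output into the next. This is only an illustration: `apply_edits` and `fake_edit` are hypothetical names, and `fake_edit` stands in for a real edit call so the example runs without network access.

```python
def apply_edits(image, prompts, edit_fn):
    """Run prompts one at a time, passing each result into the next edit."""
    history = [image]
    for prompt in prompts:
        image = edit_fn(image, prompt)
        history.append(image)
    return image, history


def fake_edit(image, prompt):
    # Hypothetical stand-in for a real edit call; it only records the prompt.
    return f"{image} -> [{prompt}]"


subject = "the woman with short black hair"
keep = "while maintaining the same facial features, hairstyle, and expression"
steps = [
    # Background first, then the action, as the framework above recommends
    f"Change the background behind {subject} to a beach, {keep}",
    f"Make {subject} wave at the camera, {keep}",
]

final, history = apply_edits("portrait.jpg", steps, fake_edit)
print(final)
```

Keeping the intermediate results in `history` makes it easy to roll back to an earlier step if one edit drifts too far from the original character.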

4. Text Editing

Use quotation marks: "Replace 'joy' with 'BFL'"

Maintain format: "Replace text while maintaining the same font style"

Common Problem Solutions

Excessive character change

❌ Incorrect: "Transform the person into a Viking"

✅ Correct: "Change the clothes to be a Viking warrior while preserving facial features"

Composition position change

❌ Incorrect: "Put him on a beach"

✅ Correct: "Change the background to a beach while keeping the person in the exact same position, scale, and pose"

Inaccurate style application

❌ Incorrect: "Make it a sketch"

✅ Correct: "Convert to pencil sketch with natural graphite lines, cross-hatching, and visible paper texture"

Core Principles

1. Be Specific - Use precise descriptions, avoid vague terms

2. Edit Step-by-Step - Break complex modifications into simpler steps

3. Explicitly Preserve - Specify what should remain unchanged

4. Choose Verbs Carefully - Use "change" or "replace" instead of "transform" when you want a targeted edit rather than a complete change

Best Practice Templates

Object Modification:

"Change [object] to [new state], keep [content to preserve] unchanged"

Style Transfer:

"Transform to [specific style], while maintaining [composition/character/other] unchanged"

Background Replacement:

"Change the background to [new background], keep the subject in the exact same position and pose"

Text Editing:

"Replace '[original text]' with '[new text]', maintain the same font style"

Remember: The more specific, the better. Kontext excels at understanding detailed instructions and maintaining consistency.
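The templates above translate directly into small prompt-building helpers. The function names below are hypothetical and simply fill in the bracketed slots; they do not call any API.

```python
def object_modification(obj, new_state, preserve):
    """Fill the Object Modification template."""
    return f"Change {obj} to {new_state}, keep {preserve} unchanged"


def style_transfer_prompt(style, preserve):
    """Fill the Style Transfer template."""
    return f"Transform to {style}, while maintaining {preserve} unchanged"


def background_replacement(new_background):
    """Fill the Background Replacement template."""
    return (f"Change the background to {new_background}, "
            "keep the subject in the exact same position and pose")


def text_edit(original, new):
    """Fill the Text Editing template; quotes mark the literal text."""
    return f"Replace '{original}' with '{new}', maintain the same font style"


print(object_modification("the car color", "red", "the background"))
print(style_transfer_prompt("Bauhaus art style", "the original composition"))
print(background_replacement("a beach"))
print(text_edit("joy", "BFL"))
```

Building prompts this way keeps the "explicitly preserve" clause from being forgotten when many images are processed in a batch.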
